Prompt Engineering Notes

LLMs and Prompt Engineering

Introduction

It has been almost two years since ChatGPT was introduced, and it has taken the world and the startup community by storm. Since then, businesses everywhere have been looking for ways to use LLM capabilities to improve their work or decision-making, and groundbreaking developments keep emerging. I am still learning and exploring this world, and through articles like this one I hope to share a few insights I’ve gained while building a couple of LLM-based prototypes.

This article will cover the basics of prompt engineering and LLMs.

What is an LLM?

LLM stands for Large Language Model. Let me simplify it further:

An LLM is a system that takes an input string and generates an output string one word (strictly, one token) at a time, choosing each next word based on its probability given the text so far.

As shown in the above diagram, I would like to introduce two important terms:

- Prompt: any input passed to the LLM is called a prompt.

- Response/Completion: the output generated by the LLM is called the response or completion.
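To make the prompt/completion loop concrete, here is a toy sketch in Python. It is not how a real LLM works internally: the hypothetical `NEXT_WORD_PROBS` table stands in for the model, it only looks at the last word (a real model considers the whole context and works on tokens rather than words), and it always picks the most probable word (greedy decoding), whereas real systems often sample.

```python
# Hypothetical, hand-written probabilities standing in for a trained model.
# Each entry maps the last word of the text so far to candidate next words.
NEXT_WORD_PROBS: dict[str, dict[str, float]] = {
    "the": {"cat": 0.6, "dog": 0.4},
    "cat": {"sat": 0.7, "ran": 0.3},
    "sat": {"down": 0.8, "still": 0.2},
    "down": {"<end>": 1.0},
}


def complete(prompt: str, max_words: int = 10) -> str:
    """Turn a prompt into a completion by greedily appending the most probable next word."""
    words = prompt.lower().split()
    if not words:
        return ""
    completion: list[str] = []
    for _ in range(max_words):
        candidates = NEXT_WORD_PROBS.get(words[-1])
        if not candidates:
            break  # the toy table has no continuation for this word
        next_word = max(candidates, key=candidates.get)  # greedy choice
        if next_word == "<end>":
            break
        words.append(next_word)
        completion.append(next_word)
    return " ".join(completion)


print(complete("The"))  # cat sat down
```

Replacing `max` with a weighted random pick (for example `random.choices` with the probabilities as weights) would turn this greedy loop into sampling, which is one reason the same prompt can produce different responses.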

History and Basics of GPT

Many of these innovations, including ChatGPT, stem from a family of models known as “Generative Pre-trained Transformer” (GPT), which is built on the Transformer neural network architecture.

The state-of-the-art GPT models we use today are usually created through a two-step process:

- Starting from a pre-trained GPT model as the base. Creating an LLM from scratch requires significant time, resources, and compute, so an existing pre-trained base model is generally used as the starting point.

- Fine-tuning that pre-trained model on more targeted data.

To give a sense of scale, the first GPT was trained on about 4.5 GB of text, while GPT-2 was trained on about 40 GB. These datasets usually come from many sources, including books, documents, websites, blogs, and forums, and web scraping is heavily used to collect large volumes of training text. ChatGPT is a general-purpose LLM with knowledge drawn from many sources; a model built for a specific domain would instead be trained or fine-tuned on data from that domain.

The model’s goal is to reproduce the patterns in its training data. LLMs learn patterns of language, logic, and reasoning from that data and use them to answer questions. They perform well on topics covered by their training data and less effectively on information outside it (especially proprietary enterprise data they have never seen).

Prompts

A prompt is the input given to the LLM.

A prompt can be built from four components:

- Instructions: the task the model should perform.

- Context: additional information related to the task (optional).

- Input Data: the data on which the task needs to be performed.

- Output Indicator: the format you want the output in.
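The four components can be assembled in code before a prompt is sent to a model. The sketch below is a minimal illustration: `build_prompt` is a hypothetical helper of my own, not part of any library, and it simply joins the parts in the order listed above, wrapping the input data in ''' delimiters as the following example does.

```python
def build_prompt(instructions: str, input_data: str,
                 output_indicator: str, context: str = "") -> str:
    """Join the four prompt components; context is optional and skipped if empty."""
    parts = [instructions.strip()]
    if context.strip():
        parts.append(context.strip())
    # Delimit the input data so the model can tell it apart from the instructions.
    parts.append("'''\n" + input_data.strip() + "\n'''")
    parts.append(output_indicator.strip())
    return "\n\n".join(parts)


prompt = build_prompt(
    instructions="Classify the sentiment of the comment as positive, negative or neutral.",
    input_data="Parking is not easy.",
    output_indicator="Answer with a single word.",
)
print(prompt)
```

Keeping the components as separate arguments makes it easy to reuse the same instructions and output format while swapping in new input data.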

Example:

Act as a language expert performing sentiment analysis. Your job is to analyse the comment and assign either positive, negative or neutral sentiment along with a score. Here is the comment:

'''

UO has lots of new fashion trends always.

The downtown broadway store was transformed from a 1920-30’s classic theater, so looked retro and spacious atmosphere.

Good quality, trendy clothings and accessories. However more expensive than other street wear stores like Tillys, PacSun, Zumiez.

I recommend if your budget is enough.

Parking is not easy.

Street parking, or public parking $20~40/day- expensive- in downtown.

'''

OUTPUT MUST BE IN VALID JSON WITH THE FOLLOWING KEYS:

1. text (contains the actual comment)

2. sentiment (either positive, negative or neutral)

3. score (score from 0-10)

Here is the output I got from the LLM (gpt-4o-mini):

{
  "text": "UO has lots of new fashion trends always.\nThe downtown broadway store was transformed from a 1920-30’s classic theater, so looked retro and spacious atmosphere.\n\nGood quality, trendy clothings and accessories. However more expensive than other street wear stores like Tillys, PacSun, Zumiez.\n\nI recommend if your budget is enough.\n\nParking is not easy.\nStreet parking, or public parking $20~40/day- expensive- in downtown.",
  "sentiment": "neutral",
  "score": 6
}
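Asking for JSON in capital letters does not guarantee the model will comply, so it is worth checking the reply before using it downstream. Here is a minimal sketch using only Python’s standard `json` module; `parse_sentiment_response` is a hypothetical helper, and its checks simply mirror the three keys, the allowed sentiment values and the 0-10 score range requested in the prompt above.

```python
import json

ALLOWED_SENTIMENTS = {"positive", "negative", "neutral"}
REQUIRED_KEYS = {"text", "sentiment", "score"}


def parse_sentiment_response(raw: str) -> dict:
    """Parse the model's reply and check it against the schema requested in the prompt.

    Raises ValueError if the reply is not valid JSON or breaks the schema.
    """
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"response is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = REQUIRED_KEYS - set(data)
    if missing:
        raise ValueError(f"missing keys: {sorted(missing)}")
    if data["sentiment"] not in ALLOWED_SENTIMENTS:
        raise ValueError(f"unexpected sentiment: {data['sentiment']!r}")
    score = data["score"]
    # bool is a subclass of int in Python, so reject it explicitly.
    is_number = isinstance(score, (int, float)) and not isinstance(score, bool)
    if not is_number or not 0 <= score <= 10:
        raise ValueError(f"score must be a number from 0 to 10, got {score!r}")
    return data


result = parse_sentiment_response(
    '{"text": "Parking is not easy.", "sentiment": "neutral", "score": 6}'
)
print(result["sentiment"], result["score"])
```

A reply shaped like the output above would pass. If the model wrapped the JSON in extra prose, `json.loads` would fail and the function would raise, which is a signal to retry or tighten the output indicator.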

I will cover these components in more depth once I start integrating these prompting techniques with the OpenAI APIs, which should make the concepts easier to understand.

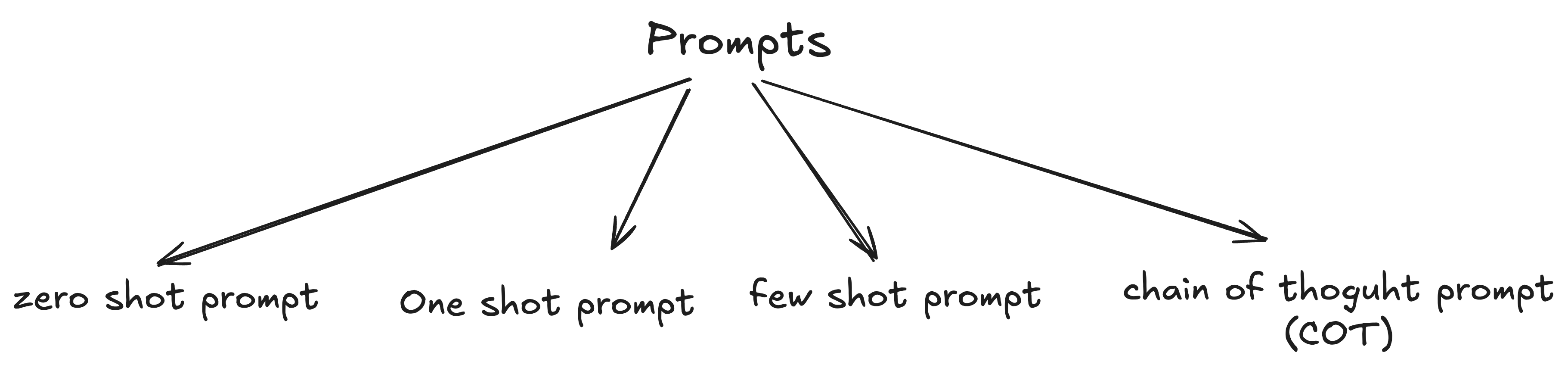

Types of prompts:

- Zero-Shot Prompts

  - No examples provided
  - No sample input/output pairs to imitate
  - Relies entirely on the model’s existing knowledge
  - Most commonly used

  Example: How do I sum column A and column B in Google Sheets?

- One-Shot Prompts

  - One example provided

  Example:

  Classify the sentiment of the following text as positive, negative, or neutral.

  Text: The product is terrible.
  Sentiment: Negative

  Text: I think the vacation was okay.
  Sentiment:

- Few-Shot Prompts

  - A few examples provided (two or more)

  Example:

  Classify the sentiment of the following text as positive, negative, or neutral.

  Text: The product is terrible.
  Sentiment: Negative

  Text: Super helpful, worth it
  Sentiment: Positive

  Text: It doesn’t work!
  Sentiment:

- Chain-of-Thought (CoT) Prompts

  - Can improve the model’s reasoning on multi-step problems
  - Asks the model to break the problem into smaller steps before reaching the result

  Example:

  I want to paint my bedroom walls. The room is 12 feet long, 10 feet wide, and 8 feet high. There are two windows, each 3 feet by 4 feet, and one door that is 3 feet by 7 feet. How much paint do I need to buy? Please explain your reasoning step by step.
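The step-by-step reasoning that the chain-of-thought prompt above asks for can be written out as a short calculation, which is also handy for checking the model’s answer. Two inputs are assumptions not stated in the prompt: a paint coverage of 350 square feet per gallon and two coats. A real answer depends on the paint actually used.

```python
import math

# Dimensions from the prompt, in feet.
LENGTH, WIDTH, HEIGHT = 12, 10, 8
WINDOW_AREA = 2 * (3 * 4)  # two 3 ft x 4 ft windows
DOOR_AREA = 3 * 7          # one 3 ft x 7 ft door

# ASSUMPTIONS (not in the prompt): typical values, check the paint can.
COVERAGE_SQFT_PER_GALLON = 350
COATS = 2

wall_area = 2 * (LENGTH + WIDTH) * HEIGHT              # step 1: all four walls
paintable = wall_area - WINDOW_AREA - DOOR_AREA        # step 2: subtract openings
total = paintable * COATS                              # step 3: account for coats
gallons = math.ceil(total / COVERAGE_SQFT_PER_GALLON)  # step 4: round up to whole gallons

print(wall_area, paintable, total, gallons)  # 352 307 614 2
```

With a single coat, 307 square feet would need about 0.88 gallons under the same coverage assumption, so one gallon would do.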
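Zero-, one- and few-shot prompts differ only in how many worked examples come before the new input. The sketch below builds all three from the sentiment examples above; `build_k_shot_prompt` is a hypothetical helper, and the Text:/Sentiment: layout is just the format these examples use, not a required one.

```python
def build_k_shot_prompt(instruction: str,
                        examples: list[tuple[str, str]],
                        query: str) -> str:
    """Zero-shot with no examples, one-shot with one pair, few-shot with several."""
    lines = [instruction, ""]
    for text, label in examples:
        # Each worked example shows the input and the expected answer.
        lines.append(f"Text: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The new input ends with an empty label for the model to complete.
    lines.append(f"Text: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


INSTRUCTION = "Classify the sentiment of the following text as positive, negative, or neutral."
EXAMPLES = [
    ("The product is terrible.", "Negative"),
    ("Super helpful, worth it", "Positive"),
]

zero_shot = build_k_shot_prompt(INSTRUCTION, [], "It doesn't work!")
one_shot = build_k_shot_prompt(INSTRUCTION, EXAMPLES[:1], "I think the vacation was okay.")
few_shot = build_k_shot_prompt(INSTRUCTION, EXAMPLES, "It doesn't work!")
print(few_shot)
```

Because only the `examples` list changes, it is easy to compare how the same model responds with zero, one or several examples.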
